EKS Spot Instances: Strategies and Best Practices to Save Big

Running your EKS worker nodes on AWS spot instances can cut costs substantially, because spot instances draw on spare EC2 capacity at deeply discounted rates. AWS advertises discounts of up to 90% compared to on-demand pricing, and average savings of around 60% are a realistic expectation, which makes spot instances an enticing option for the right workloads. However, AWS can reclaim these instances with only a two-minute warning, so a well-thought-out strategy is essential to maintain performance and availability.
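The savings figure is one minus the ratio of the spot price to the on-demand price, and keeping some on-demand nodes in the fleet dilutes it. The Python sketch below works through that arithmetic; the prices and node counts are hypothetical placeholders, not real AWS rates.

```python
def savings_fraction(on_demand_hourly: float, spot_hourly: float) -> float:
    """Fraction saved by paying the spot price instead of the on-demand price."""
    if on_demand_hourly <= 0:
        raise ValueError("on-demand price must be positive")
    return 1.0 - spot_hourly / on_demand_hourly


def blended_hourly_cost(on_demand_count: int, spot_count: int,
                        on_demand_hourly: float, spot_hourly: float) -> float:
    """Hourly cost of a fleet that mixes on-demand and spot nodes of one type."""
    return on_demand_count * on_demand_hourly + spot_count * spot_hourly


# Hypothetical prices in USD/hour, for illustration only; real prices vary
# by instance type, Region, Availability Zone, and over time.
ON_DEMAND = 0.20
SPOT = 0.08

print(f"per-node savings: {savings_fraction(ON_DEMAND, SPOT):.0%}")  # 60%
all_on_demand = blended_hourly_cost(10, 0, ON_DEMAND, SPOT)
mixed = blended_hourly_cost(3, 7, ON_DEMAND, SPOT)  # 3 on-demand + 7 spot nodes
print(f"fleet savings: {1 - mixed / all_on_demand:.0%}")  # 42%
```

With these made-up numbers, a 60% per-node discount becomes roughly 42% across a fleet that keeps three of ten nodes on demand, which is the trade-off you make for an on-demand safety net.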

This article presents a concise, actionable guide to help you integrate spot instances into your EKS clusters. It covers the fundamentals of spot instances, offers strategies for maximizing savings, explains how to build fault-tolerant architectures, and highlights common pitfalls to avoid.

Understanding EKS Spot Instances

AWS spot instances let you run non-critical, fault-tolerant workloads at a fraction of the cost of on-demand instances. Their prices are set by AWS and adjust gradually based on long-term trends in supply and demand for spare capacity. Their low cost makes them particularly attractive for workloads that can handle interruptions gracefully.

Before diving into EKS spot instance implementation, consider these essential points:

• Dynamic Pricing: Spot prices differ by instance type and AZ and change over time with supply and demand.

• Interruption Risk: AWS can reclaim capacity with just a two-minute warning.

• Diverse Capacity Pools: Different instance types and Availability Zones (AZs) have their own spare capacity pools.

These characteristics imply that successful use of spot instances depends on flexibility and diversification. By designing your infrastructure to tolerate interruptions, you can fully leverage the cost benefits without compromising reliability.
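AWS treats each combination of instance type and Availability Zone as a separate spot capacity pool, so diversification multiplies the number of pools you can draw from. The Python sketch below counts pools and estimates how many nodes a single pool reclamation could take out; the instance types, zones, and node count are hypothetical, and the even spread of nodes across pools is an assumption, not something AWS guarantees.

```python
import math
from itertools import product


def capacity_pools(instance_types, availability_zones):
    """Treat every (instance type, AZ) pair as its own spot capacity pool."""
    return list(product(instance_types, availability_zones))


def worst_case_nodes_lost(node_count, pool_count):
    """Nodes lost if the fullest pool is reclaimed, assuming an even spread."""
    if pool_count < 1:
        raise ValueError("need at least one pool")
    return math.ceil(node_count / pool_count)


# Hypothetical choices for illustration only.
types = ["m5.large", "m5a.large", "m6i.large"]
zones = ["us-east-1a", "us-east-1b"]
pools = capacity_pools(types, zones)

print(len(pools))                             # 6 pools
print(worst_case_nodes_lost(12, 1))           # 12: one pool, every node at risk
print(worst_case_nodes_lost(12, len(pools)))  # 2: six pools, smaller blast radius
```

In practice interruptions across related pools can be correlated, so treat this as a rough upper bound on the benefit rather than a precise prediction.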

Strategies for Maximizing EKS Spot Instance Savings

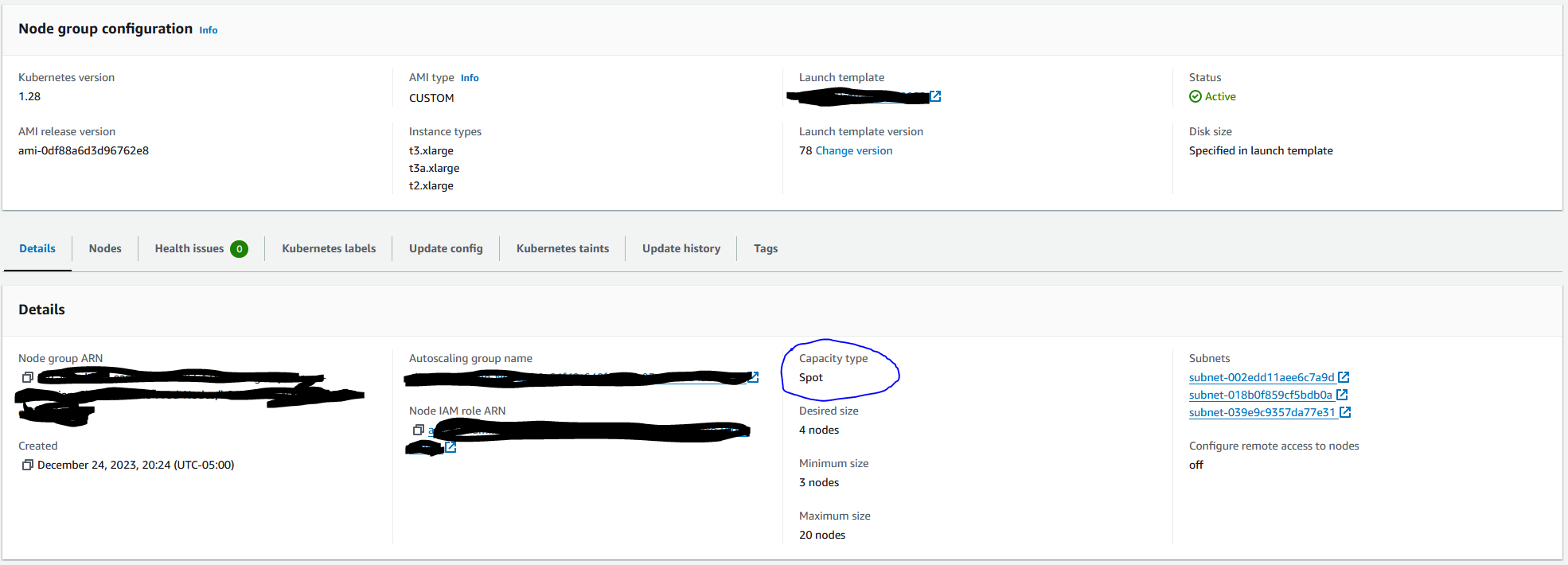

To get dependable savings from EKS spot instances, diversify. Relying on one instance type or a single Availability Zone exposes you to capacity shortages and to many nodes being terminated at once. Instead, spread your workload across several instance types and zones.

Here are a few tactics to help you do this:

• Diversify Instance Types: Choose several instance families and sizes with similar vCPU and memory profiles, so that any of them can run your application’s pods.

• Distribute Across Availability Zones: Run workloads in at least two AZs. This widens the pool of available capacity and lowers zone-specific risk.

• Leverage Auto Scaling Groups: Configure Auto Scaling groups that combine on-demand and spot instances, so a baseline of on-demand capacity remains as a safety net during interruptions.

• Monitor Market Trends: Check spot price histories regularly and adjust your instance choices as market conditions change.

Together, these tactics improve your chances of keeping steady capacity while still capturing spot savings. Diversifying and monitoring your deployments gives you a foundation that adapts as market conditions change.
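One way to combine instance diversification with an on-demand safety net is an EC2 Auto Scaling group with a `MixedInstancesPolicy`, which self-managed EKS node groups can use. The Python sketch below only builds the policy dictionary in the shape boto3's `create_auto_scaling_group` accepts; the launch template name `eks-spot-nodes`, the instance types, the on-demand base of 1, and the 20% on-demand share are hypothetical values to tune for your own workload.

```python
import json


def mixed_instances_policy(launch_template_name, instance_types,
                           on_demand_base=1, on_demand_pct_above_base=20):
    """Build a MixedInstancesPolicy dict for an EC2 Auto Scaling group."""
    if not instance_types:
        raise ValueError("list at least one instance type")
    return {
        "LaunchTemplate": {
            "LaunchTemplateSpecification": {
                "LaunchTemplateName": launch_template_name,  # hypothetical name
                "Version": "$Latest",
            },
            # One override per instance type widens the set of spot pools.
            "Overrides": [{"InstanceType": t} for t in instance_types],
        },
        "InstancesDistribution": {
            # Always-on on-demand nodes, then a percentage split above that.
            "OnDemandBaseCapacity": on_demand_base,
            "OnDemandPercentageAboveBaseCapacity": on_demand_pct_above_base,
            "SpotAllocationStrategy": "price-capacity-optimized",
        },
    }


policy = mixed_instances_policy(
    "eks-spot-nodes", ["m5.large", "m5a.large", "m6i.large"]
)
print(json.dumps(policy, indent=2))
```

To apply it, you would pass `MixedInstancesPolicy=policy` to `boto3.client("autoscaling").create_auto_scaling_group`, together with the required group name and size parameters and a `VPCZoneIdentifier` listing subnets in at least two AZs. The `price-capacity-optimized` strategy asks AWS to favor pools with deep spare capacity and then the lowest price among them; `capacity-optimized` is an alternative when interruption rate matters more than price.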

Building Fault-Tolerant Architectures

Because interruptions are inherent to spot instances, designing for resilience is critical. Applications that handle instance terminations gracefully avoid downtime and data loss when a node is reclaimed.

Key measures to enhance fault tolerance include:

• Prepare for Interruptions: Use Kubernetes features such as pod disruption budgets and rolling updates to minimize the impact of a spot instance being reclaimed.

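• Automate Recovery: Run a node termination handler, such as the open-source AWS Node Termination Handler, that detects the two-minute interruption notice, cordons the node, and drains it so Kubernetes reschedules its pods elsewhere.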

• Design for Statelessness: Whenever possible, design your applications to be stateless. For stateful workloads, consider external storage such as Amazon EFS or robust data replication strategies.

• Enable Horizontal Pod Autoscaling: The Horizontal Pod Autoscaler adjusts the number of replicas to match load, which helps keep capacity in line with demand when an interruption shifts traffic onto fewer pods.
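A pod disruption budget caps how many replicas of an application a voluntary disruption, such as a node drain, can take down at once. The Python sketch below emits a `policy/v1` PodDisruptionBudget manifest as JSON, which `kubectl apply -f` accepts just as it accepts YAML; the name `web-pdb`, the `app: web` label, and `minAvailable: 2` are hypothetical.

```python
import json


def pod_disruption_budget(name, namespace, app_label, min_available):
    """Return a policy/v1 PodDisruptionBudget manifest as a plain dict."""
    return {
        "apiVersion": "policy/v1",
        "kind": "PodDisruptionBudget",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Evictions are refused if they would leave fewer ready pods than this.
            "minAvailable": min_available,
            "selector": {"matchLabels": {"app": app_label}},
        },
    }


pdb = pod_disruption_budget("web-pdb", "default", "web", 2)
print(json.dumps(pdb, indent=2))  # e.g. redirect to pdb.json, then kubectl apply -f pdb.json
```

A PDB only governs evictions. A drain triggered by a termination handler respects it, but once AWS reclaims the instance its pods stop regardless, so the budget buys an orderly drain rather than immunity.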

Incorporating these practices into your architecture not only safeguards your applications from potential disruptions but also allows you to take full advantage of the cost efficiencies of spot instances. A resilient design means that even if individual instances are lost, your overall system remains robust and responsive.
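To make the detection step of a termination handler concrete, the sketch below polls the EC2 instance metadata service (IMDSv2) at `spot/instance-action`, which returns 404 until AWS schedules an interruption and then returns JSON such as `{"action": "terminate", "time": "2017-09-18T08:22:00Z"}`. It is a teaching sketch, not a replacement for a maintained tool such as AWS Node Termination Handler; the 5-second polling interval and the `on_notice` callback (where you would cordon and drain the node) are assumptions.

```python
import json
import time
import urllib.error
import urllib.request
from datetime import datetime, timezone

IMDS = "http://169.254.169.254/latest"


def imds_token(ttl_seconds=60):
    """Request an IMDSv2 session token; this only works from inside EC2."""
    req = urllib.request.Request(
        f"{IMDS}/api/token",
        method="PUT",
        headers={"X-aws-ec2-metadata-token-ttl-seconds": str(ttl_seconds)},
    )
    with urllib.request.urlopen(req, timeout=2) as resp:
        return resp.read().decode()


def fetch_instance_action(token):
    """Return the raw interruption notice, or None while IMDS answers 404."""
    req = urllib.request.Request(
        f"{IMDS}/meta-data/spot/instance-action",
        headers={"X-aws-ec2-metadata-token": token},
    )
    try:
        with urllib.request.urlopen(req, timeout=2) as resp:
            return resp.read().decode()
    except urllib.error.HTTPError as err:
        if err.code == 404:  # no interruption scheduled yet
            return None
        raise


def parse_notice(raw, now=None):
    """Return (action, seconds remaining) from an instance-action document."""
    notice = json.loads(raw)
    deadline = datetime.strptime(notice["time"], "%Y-%m-%dT%H:%M:%SZ").replace(
        tzinfo=timezone.utc
    )
    if now is None:
        now = datetime.now(timezone.utc)
    return notice["action"], (deadline - now).total_seconds()


def poll_for_interruption(on_notice, interval_seconds=5):
    """Poll until a notice appears, then pass it to the (hypothetical) callback."""
    while True:
        raw = fetch_instance_action(imds_token())
        if raw is not None:
            on_notice(*parse_notice(raw))
            return
        time.sleep(interval_seconds)
```

On a node you might call `poll_for_interruption(...)` with a callback that runs `kubectl drain <node> --ignore-daemonsets --delete-emptydir-data`, leaving the scheduler to place the evicted pods on healthy nodes.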

Avoiding Common EKS Spot Instance Pitfalls

While the benefits of EKS spot instances are significant, a few common mistakes can undermine them. Avoiding these pitfalls helps keep your transition to spot instances smooth and sustainable.

Consider these points of caution:

• Over-Reliance on a Single Instance Type or AZ: Concentrating your nodes in one capacity pool leaves you exposed when that pool runs short of spare capacity.

• Insufficient Monitoring: Without proper tracking of performance and cost metrics, you may be blindsided by sudden price changes or interruptions.

• Lack of Automation: Manual handling of spot instance interruptions can lead to prolonged downtime; automation is essential for quick recovery.

• Neglecting Continuous Optimization: The spot market is dynamic. Regular reviews and adjustments to your strategy are critical for long-term success.

By being aware of these potential challenges and implementing proactive measures, you can minimize disruption and maintain high service levels while optimizing costs.
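To act on the monitoring and continuous-optimization advice, you can summarize price history per capacity pool and flag the pools whose prices move the most. The Python sketch below assumes records shaped like the `SpotPriceHistory` entries returned by EC2's `DescribeSpotPriceHistory` API (boto3 `describe_spot_price_history`), where `SpotPrice` is a string; the sample records and the 0.15 volatility threshold are hypothetical.

```python
from collections import defaultdict
from statistics import mean, pstdev


def summarize_spot_prices(records):
    """Group records by (InstanceType, AvailabilityZone) and summarize each pool."""
    by_pool = defaultdict(list)
    for rec in records:
        pool = (rec["InstanceType"], rec["AvailabilityZone"])
        by_pool[pool].append(float(rec["SpotPrice"]))  # API returns prices as strings
    summary = {}
    for pool, prices in by_pool.items():
        avg = mean(prices)
        summary[pool] = {
            "min": min(prices),
            "max": max(prices),
            "mean": avg,
            # Coefficient of variation: price spread relative to the average.
            "cv": pstdev(prices) / avg if avg else 0.0,
        }
    return summary


def volatile_pools(summary, cv_threshold=0.15):
    """Pools whose relative price variation exceeds the (hypothetical) threshold."""
    return sorted(pool for pool, stats in summary.items() if stats["cv"] > cv_threshold)


# Hypothetical sample data, not real prices.
sample = [
    {"InstanceType": "m5.large", "AvailabilityZone": "us-east-1a", "SpotPrice": "0.040"},
    {"InstanceType": "m5.large", "AvailabilityZone": "us-east-1a", "SpotPrice": "0.060"},
    {"InstanceType": "m5a.large", "AvailabilityZone": "us-east-1b", "SpotPrice": "0.050"},
]
stats = summarize_spot_prices(sample)
print(volatile_pools(stats))  # [('m5.large', 'us-east-1a')]
```

The coefficient of variation is used instead of the raw standard deviation so that pools with different base prices can be compared on the same scale.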

Final Thoughts: Build Resilient, Cost-Efficient EKS Clusters

Integrating AWS spot instances into your EKS clusters offers a powerful way to reduce costs without sacrificing performance. By understanding the dynamics of spot pricing, diversifying your instance types and AZs, and designing for fault tolerance, you can build an infrastructure that is both resilient and budget-friendly.

Used with these safeguards, EKS spot instances can turn your clusters into a lean, agile, and cost-efficient platform and take your cloud strategy to the next level.