AWS S3 Cost Optimization: Drastically Reduce Your Cloud Storage Costs

Amazon Web Services (AWS) Simple Storage Service (S3) is a core tool for both businesses and individuals. It provides scalable, reliable, and secure object storage and supports many use cases, from hosting static websites to storing massive datasets for big data analytics. As your data grows, your AWS bill will likely grow with it, and S3 is often one of the larger cost drivers. That is why S3 cost optimization matters.

This post provides actionable strategies to manage and reduce your AWS S3 costs. We explore the S3 storage classes, discuss lifecycle management and versioning, and walk through Terraform examples that put these ideas into practice. Whether you are a small business owner, a cloud architect, or an IT professional, these insights will help you get the most out of AWS S3 while controlling expenses.

Understanding AWS S3 Storage Types

Effective S3 cost management starts with knowing the available storage classes and their best use cases. All classes are designed for the same high durability; what they trade off is storage price against retrieval fees, retrieval speed, availability, and minimum storage duration. Selecting the right storage class can reduce your expenses while maintaining the performance you need.

Consider S3 Standard, which provides fast access for frequently used data. In contrast, classes like Intelligent-Tiering and Standard-Infrequent Access (Standard-IA) work well for data that is accessed less often. Intelligent-Tiering moves objects to cheaper tiers automatically, while with Standard-IA you place or transition the data yourself.

Key considerations for storage classes:

• S3 Standard: Best for data you access often; high performance and availability.

• Intelligent-Tiering: Automatically shifts data between frequent and infrequent access tiers based on use.

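• Standard-IA and One Zone-IA: Ideal for infrequently accessed data; storage is cheaper in exchange for a per-GB retrieval fee and a 30-day minimum storage duration. One Zone-IA reduces cost further by using a single Availability Zone, so it suits data you can recreate.

• Glacier & Glacier Deep Archive: Suited to long-term archiving where retrieval can take minutes to hours. Both carry minimum storage durations (90 days for Glacier Flexible Retrieval, 180 days for Deep Archive).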

Choosing the appropriate storage class based on your access patterns helps keep storage expenses in check while ensuring your data stays available when needed.
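As a minimal sketch of choosing a class explicitly, the Terraform below uploads one object straight into Standard-IA instead of the default S3 Standard. The variable `bucket_name`, the object key, and the inline content are hypothetical placeholders, and the bucket is assumed to exist already.

```hcl
variable "bucket_name" {
  type        = string
  description = "Name of an existing bucket (hypothetical input for this sketch)"
}

resource "aws_s3_object" "quarterly_report" {
  bucket  = var.bucket_name
  key     = "reports/quarterly-report.csv" # illustrative key
  content = "placeholder,data\n"           # illustrative content

  # Other accepted values include STANDARD, INTELLIGENT_TIERING,
  # ONEZONE_IA, GLACIER, and DEEP_ARCHIVE.
  storage_class = "STANDARD_IA"
}
```

Setting the class at upload time makes sense when you already know the access pattern. When you do not, Intelligent-Tiering or a lifecycle rule is usually the safer choice, because small or short-lived objects can cost more in Standard-IA than in S3 Standard.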

AWS S3 Lifecycle Management and Versioning

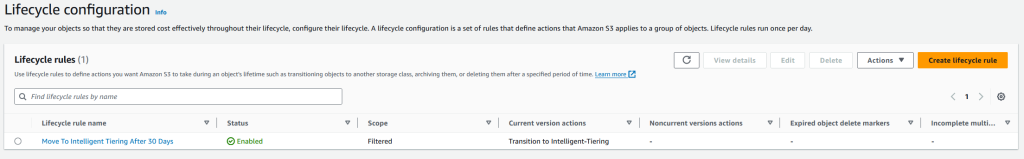

Managing your data lifecycle is crucial for cost control in AWS S3. Lifecycle management lets you automate how objects move between storage classes and delete them when no longer needed. This strategy keeps your storage lean and cost-efficient.

Lifecycle policies allow you to set rules that transition data to cheaper classes after a set period or remove outdated objects. They work well for temporary files, logs, and rarely accessed data. Versioning preserves every update but can drive up costs if you let old versions accumulate unchecked.

Key points on S3 lifecycle management and versioning:

• Transition Actions: Automate data movement to cost-effective storage classes after a specific time.

• Expiration Actions: Automatically delete objects or outdated versions to free up space.

• Noncurrent Version Transitions: Move older versions to lower-cost classes.

• Versioning Impact: Manage versions carefully to prevent unnecessary storage expenses.

Applying these lifecycle policies consistently reduces costs while keeping data durability intact.
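To illustrate the versioning point, here is a hedged sketch of a rule that caps how many old versions a versioned bucket keeps. The variable `bucket_name` is a hypothetical input, versioning is assumed to be enabled on that bucket already, and the numbers are illustrative rather than recommended values.

```hcl
variable "bucket_name" {
  type        = string
  description = "Name of an existing, versioned bucket (hypothetical input)"
}

resource "aws_s3_bucket_lifecycle_configuration" "version_cleanup" {
  bucket = var.bucket_name

  rule {
    id     = "cap-noncurrent-versions"
    status = "Enabled"

    filter {} # empty filter: the rule applies to every object in the bucket

    # Keep the 3 newest noncurrent versions; delete any older ones
    # once they have been noncurrent for 30 days.
    noncurrent_version_expiration {
      noncurrent_days           = 30
      newer_noncurrent_versions = 3
    }
  }
}
```

A bucket holds a single lifecycle configuration, so if you manage several rules for the same bucket, put them in one `aws_s3_bucket_lifecycle_configuration` resource rather than declaring one resource per rule.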

Actionable Recommendations for AWS S3 Cost Optimization

AWS S3 cost optimization goes beyond understanding storage types. You need to implement practical steps that control expenses. This section provides recommendations you can apply directly.

For example, set lifecycle rules to move data from S3 Standard to Standard-IA after 30 days and to Glacier after 90 days. Also, monitor your S3 usage with tools such as S3 Storage Lens and S3 Analytics, and set up AWS Budgets alerts to catch inefficiencies early. These actions help you adjust your strategy quickly and effectively.
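The 30-day and 90-day schedule described above could be written in Terraform roughly as follows. This is a sketch that assumes AWS provider v4 or later; the variable `bucket_name` is a hypothetical input naming an existing bucket.

```hcl
variable "bucket_name" {
  type        = string
  description = "Name of an existing bucket (hypothetical input for this sketch)"
}

resource "aws_s3_bucket_lifecycle_configuration" "tiering" {
  bucket = var.bucket_name

  rule {
    id     = "standard-to-ia-to-glacier"
    status = "Enabled"

    filter {} # empty filter: the rule applies to every object in the bucket

    # 30 days after creation: S3 Standard -> Standard-IA
    transition {
      days          = 30
      storage_class = "STANDARD_IA"
    }

    # 90 days after creation: Standard-IA -> Glacier
    transition {
      days          = 90
      storage_class = "GLACIER"
    }
  }
}
```

Both `days` values count from object creation, not from the previous transition, so each object spends 60 days in Standard-IA before it moves to Glacier.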

Key recommendations to cut AWS S3 costs:

• Implement Lifecycle Policies: Automate transitions and expiration to lower-cost storage tiers, especially for older or non-current versions of data if applicable.

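• Optimize Data Transfer: Keep buckets and the compute that reads them in the same Region to avoid inter-Region transfer charges, and put CloudFront in front of public content so repeat requests are served from cache.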

• Monitor Usage: Use AWS Budgets and S3 Analytics to track and optimize your storage.

• Tag and Group Resources: Categorize S3 buckets to better manage and allocate costs.

Following these practical steps can help you manage your storage expenses and let you reinvest savings in other critical areas.
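The monitoring and tagging recommendations can also be captured in Terraform. The sketch below is one possible setup, not a prescribed one: the bucket name, tag keys and values, the 100 USD limit, the 80% threshold, and the `alert_email` variable are all illustrative assumptions.

```hcl
variable "alert_email" {
  type        = string
  description = "Address that receives budget alerts (hypothetical input)"
}

resource "aws_s3_bucket" "tagged" {
  bucket = "your-unique-tagged-bucket-name" # placeholder, must be globally unique

  # Tags used to group and allocate storage costs
  tags = {
    Team       = "analytics"
    CostCenter = "cc-1234"
  }
}

resource "aws_budgets_budget" "s3_monthly" {
  name         = "s3-monthly-cost"
  budget_type  = "COST"
  limit_amount = "100"
  limit_unit   = "USD"
  time_unit    = "MONTHLY"

  # Restrict the budget to S3 spend
  cost_filter {
    name   = "Service"
    values = ["Amazon Simple Storage Service"]
  }

  # Email an alert once actual spend passes 80% of the limit
  notification {
    comparison_operator        = "GREATER_THAN"
    threshold                  = 80
    threshold_type             = "PERCENTAGE"
    notification_type          = "ACTUAL"
    subscriber_email_addresses = [var.alert_email]
  }
}
```

Bucket tags only show up in cost reports after you activate them as cost allocation tags in the Billing console.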

Identifying and Managing Large S3 Buckets

Managing large S3 buckets is essential to prevent runaway costs. AWS provides tools like S3 Storage Lens and S3 Analytics that offer insights into storage usage and cost-saving opportunities. Regular audits of your buckets can reveal data that you no longer need or that can shift to a cheaper tier.

Using CloudWatch metrics also helps you monitor bucket sizes and data flow. This approach enables you to make smart decisions about data retention and transitions, ensuring efficient storage management over time.

Key strategies for managing large S3 buckets:

• S3 Storage Lens: Gain comprehensive insights into storage use.

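• S3 Analytics: Use Storage Class Analysis to see which data is accessed rarely enough to move from S3 Standard to Standard-IA.

• CloudWatch Metrics: Track bucket size and object count through the daily storage metrics, and enable request metrics if you also need to watch data movement.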

• Regular Audits: Review your buckets often to enforce lifecycle policies.

Actively managing your large S3 buckets allows you to optimize your storage and lower your overall AWS bill.
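As a rough sketch of how two of these tools might be wired up with Terraform, the snippet below turns on Storage Class Analysis for a bucket and raises a CloudWatch alarm when its S3 Standard storage passes a size threshold. The variable `bucket_name`, the alarm name, and the 1 TiB threshold are hypothetical choices for illustration.

```hcl
variable "bucket_name" {
  type        = string
  description = "Name of an existing bucket (hypothetical input for this sketch)"
}

# Storage Class Analysis over the whole bucket
resource "aws_s3_bucket_analytics_configuration" "whole_bucket" {
  bucket = var.bucket_name
  name   = "EntireBucket"
}

# Alarm when S3 Standard storage in the bucket exceeds the threshold
resource "aws_cloudwatch_metric_alarm" "bucket_size" {
  alarm_name          = "s3-bucket-size-over-1tib"
  alarm_description   = "S3 Standard storage in the bucket exceeds 1 TiB"
  namespace           = "AWS/S3"
  metric_name         = "BucketSizeBytes"
  statistic           = "Average"
  period              = 86400 # storage metrics are reported once per day
  evaluation_periods  = 1
  comparison_operator = "GreaterThanThreshold"
  threshold           = 1099511627776 # 1 TiB in bytes (illustrative)

  dimensions = {
    BucketName  = var.bucket_name
    StorageType = "StandardStorage"
  }
}
```

The alarm has no actions attached here. In practice you would likely add an SNS topic through `alarm_actions` so that someone is notified when it fires.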

Terraform S3 Lifecycle Rule Examples

Automating lifecycle policies with Terraform simplifies cost management and enforces consistency. Below are two Terraform examples: one for a standard bucket with lifecycle rules for logs, and another for an archive bucket with versioning.

The first snippet creates an S3 bucket and sets a lifecycle rule for log data. It moves objects to Standard-IA after 30 days and deletes them after 90 days.

Key aspects of Terraform for S3 lifecycle:

• Automation: Apply lifecycle rules consistently.

• Cost Efficiency: Transition data to cheaper storage classes automatically.

• Resource Management: Handle versioning and expiration with code.

• Reproducibility: Maintain consistent policies across your infrastructure.

The following Terraform snippet creates an S3 bucket with a lifecycle configuration for log data:

    resource "aws_s3_bucket" "example_bucket" {
      bucket = "your-unique-bucket-name-here" # Bucket names must be globally unique
    }

    resource "aws_s3_bucket_lifecycle_configuration" "example_lifecycle" {
      bucket = aws_s3_bucket.example_bucket.id

      rule {
        id     = "log"
        status = "Enabled" # This resource takes status, not enabled = true

        filter {
          prefix = "log/" # Apply this rule only to objects under the 'log/' prefix
        }

        # Move log objects to Standard-IA 30 days after creation
        transition {
          days          = 30
          storage_class = "STANDARD_IA"
        }

        # Delete log objects 90 days after creation
        expiration {
          days = 90
        }
      }
    }

For archival purposes, this snippet sets up a bucket with versioning enabled and a lifecycle configuration that transitions noncurrent versions to Glacier:

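    resource "aws_s3_bucket" "archive_bucket" {
      bucket = "your-unique-archive-bucket-name"
    }

    # AWS provider v4+ manages versioning through its own resource
    resource "aws_s3_bucket_versioning" "archive_versioning" {
      bucket = aws_s3_bucket.archive_bucket.id

      versioning_configuration {
        status = "Enabled"
      }
    }

    resource "aws_s3_bucket_lifecycle_configuration" "archive_lifecycle" {
      bucket = aws_s3_bucket.archive_bucket.id

      # Enable versioning first so the noncurrent-version actions have something to act on
      depends_on = [aws_s3_bucket_versioning.archive_versioning]

      rule {
        id     = "archive_rule"
        status = "Enabled"

        filter {} # Empty filter: the rule applies to every object in the bucket

        # Move noncurrent versions to Glacier 30 days after they are superseded
        noncurrent_version_transition {
          noncurrent_days = 30
          storage_class   = "GLACIER"
        }

        # Permanently delete noncurrent versions after 365 days
        noncurrent_version_expiration {
          noncurrent_days = 365
        }
      }
    }

Using Terraform not only automates these processes but also ensures that your cost optimization policies are maintained consistently across your AWS S3 resources.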

Moving Forward with S3 Cost Optimization Strategies

Adopting a multifaceted approach to S3 cost optimization is essential to make the most of your cloud storage while keeping expenses in check. By understanding the various storage classes, implementing robust lifecycle management, and leveraging automation tools like Terraform, you can significantly reduce your AWS S3 costs without compromising on data accessibility or performance.

Embracing these strategies will enable you to allocate resources more effectively, allowing you to invest savings in other critical areas of your business or projects. Continuous monitoring and periodic reviews of your storage practices will ensure that your cost optimization efforts remain effective as your data needs evolve.